Technology 1: AI/Machine Learning

Session: Technology 1: AI/Machine Learning

174 - Large Language Model Matches Physician Accuracy in Diagnosing Neonatal Conditions

Saturday, April 26, 2025

2:30pm - 4:45pm HST

Publication Number: 174.5723

Emma Holmes, The Mount Sinai Kravis Children's Hospital, New York, NY, United States; Caroline Massarelli, Icahn School of Medicine at Mount Sinai, New York, NY, United States; Courtney Juliano, Icahn School of Medicine at Mount Sinai, New York, NY, United States; Bruce D. Gelb, Icahn School of Medicine at Mount Sinai, New York, NY, United States; Girish Nadkarni, Icahn School of Medicine at Mount Sinai, New York, NY, United States; Eyal Klang, Icahn School of Medicine at Mount Sinai, New York, NY, United States

Emma Holmes, MD (she/her/hers)

Assistant Professor

The Mount Sinai Kravis Children's Hospital

New York, New York, United States

Presenting Author(s)

Background: Physician-generated diagnosis codes are known to be inaccurate, including among neonatal populations. These inaccuracies can limit the generation of reliable research cohorts, reduce hospital revenue, and negatively impact the quality of patient care. Large language models (LLMs) excel at interpreting large volumes of text, such as electronic medical records (EMRs). LLMs such as GPT-4o have been shown to improve the accuracy of physician-generated codes in the adult emergency department setting, suggesting their potential to enhance diagnosis coding for neonates in the NICU.

Objective: Evaluate the accuracy of GPT-4o in identifying common neonatal diagnoses in NICU patients from provider notes and compare it to physician-generated diagnoses.

Design/Methods: We randomly selected 50 infants admitted to the NICU between 2022 and 2023 who did not require respiratory support, a group we identified as at risk for underdiagnosis. Using HIPAA-compliant GPT-4o via Mount Sinai Hospital's (MSH) Microsoft Azure tenant, we identified common diagnoses from provider notes. To increase specificity, only diagnoses present in at least two notes were considered LLM-generated diagnoses. These were compared with physician-generated diagnoses extracted from the EMR. Two independent physicians reviewed all diagnoses for accuracy; discordant labels were resolved by discussion to reach a consensus label. Non-specific diagnoses were noted but considered accurate unless they were too vague to identify the condition being referenced (e.g., ‘Other specified health status’).
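The two-note rule described above can be illustrated with a minimal Python sketch. This is not the study's code: the function name, the input shape (one list of diagnosis labels per note), and the toy notes are all assumptions made for illustration only.

```python
from collections import Counter


def diagnoses_in_at_least_n_notes(per_note_diagnoses, min_notes=2):
    """Keep diagnoses reported in at least `min_notes` distinct notes.

    `per_note_diagnoses` is a hypothetical structure: one list of
    diagnosis labels per provider note, as returned by the model.
    """
    counts = Counter()
    for note_dx in per_note_diagnoses:
        # Use a set so a label repeated within one note counts once.
        counts.update(set(note_dx))
    return sorted(dx for dx, n in counts.items() if n >= min_notes)


# Toy input for one infant (illustrative labels, not study data).
notes = [
    ["Neonatal hypoglycemia", "Slow feeding newborn"],
    ["Neonatal hypoglycemia"],
    ["ABO incompatibility", "ABO incompatibility"],
]
result = diagnoses_in_at_least_n_notes(notes)
print(result)
```

In this toy input only ‘Neonatal hypoglycemia’ appears in two separate notes, so it is the only label retained; a label repeated within a single note does not meet the threshold.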

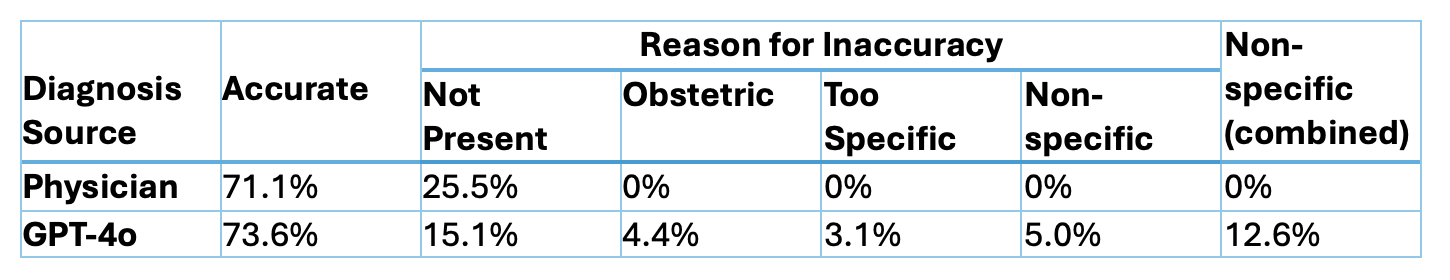

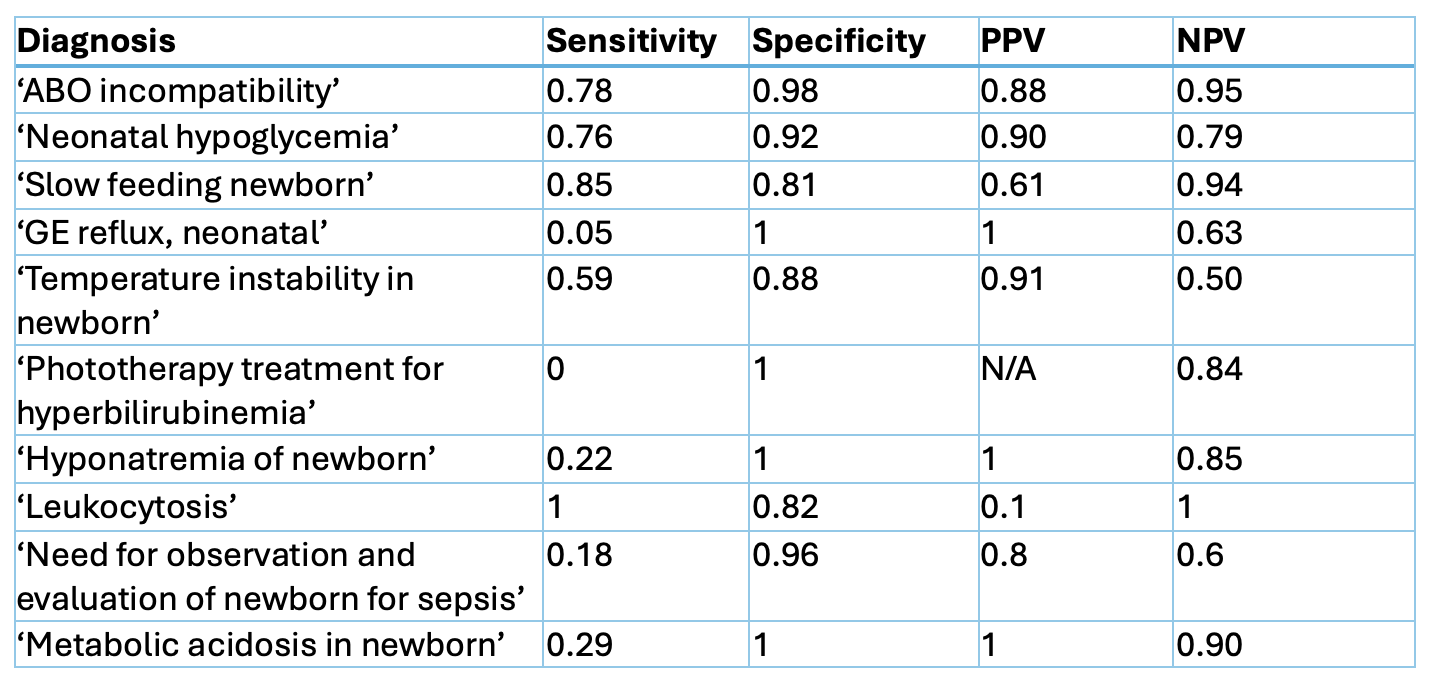

Results: GPT-4o achieved diagnostic accuracy (73.6%) comparable to that of physicians (71.1%) (Table 1). Sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) for GPT-4o in detecting each diagnosis are detailed in Table 2, except where the volume of a diagnosis was too low to calculate a metric. While GPT-4o achieved high specificity (range 0.81 to 1), sensitivity varied (range 0 to 0.85), particularly for subjective diagnoses such as ‘GE reflux, neonatal’ and ‘Need for observation and evaluation of newborn for sepsis.’
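For readers unfamiliar with the four per-diagnosis metrics, the sketch below shows their standard definitions from a 2x2 confusion table. The function names and the example counts are hypothetical and are not taken from the study; a metric is returned as `None` when its denominator is zero, mirroring the case where a diagnosis is too rare to calculate it.

```python
def safe_ratio(numerator, denominator):
    """Return numerator / denominator, or None if the denominator is zero."""
    return numerator / denominator if denominator else None


def diagnostic_metrics(tp, fp, fn, tn):
    """Standard 2x2 metrics from true/false positive and negative counts."""
    return {
        "sensitivity": safe_ratio(tp, tp + fn),  # true positive rate
        "specificity": safe_ratio(tn, tn + fp),  # true negative rate
        "ppv": safe_ratio(tp, tp + fp),          # positive predictive value
        "npv": safe_ratio(tn, tn + fn),          # negative predictive value
    }


# Hypothetical counts for one diagnosis across 50 infants (not study data).
example = diagnostic_metrics(tp=8, fp=2, fn=2, tn=38)
print(example)

# A diagnosis with no true cases has an undefined sensitivity.
rare = diagnostic_metrics(tp=0, fp=0, fn=0, tn=50)
print(rare["sensitivity"])
```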

Conclusion(s): GPT-4o matched overall physician accuracy in identifying neonatal diagnoses. This zero-shot approach achieved a high level of sensitivity and specificity for certain key conditions, such as ‘ABO incompatibility,’ ‘Neonatal hypoglycemia,’ and ‘Slow feeding newborn.’ Subjectivity in the diagnosis posed a greater challenge to the LLM. Further prompt engineering and use of retrieval-augmented generation (RAG) would likely improve the performance.

Table 1. Accuracy of GPT-4o vs Physician Diagnoses

Comparison of accuracy, reasons for inaccuracy, and proportion of non-specific diagnoses between physician-provided and GPT-4o-provided diagnoses. Diagnoses were noted as non-specific if a more specific diagnosis was available, and were considered inaccurate only if the lack of specificity made it impossible to discern which condition the diagnosis referred to.

Table 2. GPT-4o performance for each prompted diagnosis

Accuracy metrics for GPT-4o by diagnosis. The following diagnoses are excluded from the table because of insufficient volume in the sample: ‘Hypocalcemia and hypomagnesemia of newborn’ and ‘Neonatal hypermagnesemia’.
