Technology 1: AI/Machine Learning


177 - Non-Sensor Video-Based Pose Estimation Model for Automated Infant Movement Assessment

Saturday, April 26, 2025

2:30pm - 4:45pm HST

Publication Number: 177.5940

Mei-Jy Jeng, National Yang Ming Chiao Tung University, Taipei, Taipei, Taiwan (Republic of China); Yu-Cheng Lo, Taipei Veterans General Hospital, Taipei, Taipei, Taiwan (Republic of China); I-Fang Chung, Institute of Biomedical Informatics, National Yang Ming Chiao Tung University, Taipei, Taipei, Taiwan (Republic of China)

Mei-Jy Jeng, MD, PhD (she/her/hers)

Professor

National Yang Ming Chiao Tung University

Taipei, Taipei, Taiwan (Republic of China)

Presenting Author(s)

Background: Detecting neonatal and infant movements is crucial for monitoring developmental milestones and enabling early intervention for potential disorders. Traditional sensor-based methods can be intrusive and impractical. Video-based pose estimation offers a non-invasive alternative that analyzes infant movements from visual data alone. By combining computer vision and machine learning, researchers can build accurate pose estimation models that make movement detection and general movement assessment more accessible and efficient, which may in the future support the assessment of infant growth and development.

Objective: To create a video-based pose estimation model for automating the assessment of neonatal and infant movements without sensors.

Design/Methods: With IRB approval (IRB TPEVGH No.: 2018-11-001C), we enrolled infants aged 33 to 60 weeks post-menstrual age (PMA). We recorded videos and annotated 22 keypoints, covering facial features and limb joints, frame by frame. The data were split into training, validation, and test sets in a 7:2:1 ratio. Annotations were converted to ground-truth heatmaps, and faces were blurred for de-identification. We developed models using Simple Baselines, ResUNet, and HRNet, used an Infinite Impulse Response (IIR) strategy to address discontinuities, and excluded crying episodes by analyzing lip distances. Model performance was evaluated using the percentage of correct keypoints (PCK) and recall rates.
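The abstract names two post-processing steps, an Infinite Impulse Response (IIR) strategy for discontinuities and a lip-distance rule for excluding crying episodes, without giving their parameters. The Python sketch below assumes a first-order IIR (exponential) filter applied to each keypoint's (x, y) track, with missed detections holding the last estimate, and a crying flag raised when the upper-to-lower lip distance, divided by a per-frame reference length, exceeds a threshold. The filter order, `alpha=0.6`, the gap-holding rule, the reference length, and `threshold=0.3` are illustrative assumptions, not values reported by the authors, and the function names are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def iir_smooth(track: Sequence[Optional[Point]], alpha: float = 0.6) -> List[Optional[Point]]:
    """First-order IIR (exponential) smoothing of one keypoint's (x, y) track.

    Implements s[t] = alpha * p[t] + (1 - alpha) * s[t-1]. Frames where the
    keypoint is missing (None) repeat the last smoothed estimate (assumed
    gap-handling rule), so the track does not jump or drop out.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out: List[Optional[Point]] = []
    state: Optional[Point] = None
    for p in track:
        if p is not None:
            if state is None:
                state = p  # initialise the filter with the first detection
            else:
                state = (alpha * p[0] + (1 - alpha) * state[0],
                         alpha * p[1] + (1 - alpha) * state[1])
        out.append(state)  # None only before the first detection
    return out


def is_crying(upper_lip: Point, lower_lip: Point, ref_len: float, threshold: float = 0.3) -> bool:
    """Flag a frame when the lip gap, normalised by a reference length, exceeds threshold (assumed rule)."""
    return math.dist(upper_lip, lower_lip) / ref_len > threshold


def non_crying_frames(upper: Sequence[Point], lower: Sequence[Point],
                      ref_lens: Sequence[float], threshold: float = 0.3) -> List[int]:
    """Indices of frames kept for movement analysis, with crying frames excluded."""
    return [t for t, (u, lo, r) in enumerate(zip(upper, lower, ref_lens))
            if not is_crying(u, lo, r, threshold)]
```

A smaller `alpha` gives smoother tracks at the cost of more lag behind fast limb movements; in practice both `alpha` and `threshold` would need tuning on the validation set.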

Results: We collected 267 videos from 195 infants (mean age 66 ± 58 days after birth; mean PMA 44 ± 7 weeks), yielding 5189, 1230, and 780 images for training, validation, and testing, respectively. The model achieved a PCK@1 score of 0.929, with data augmentation improving accuracy, and an average recall rate of 0.864. The Simple Baselines model exceeded 90% accuracy for most keypoints, the hip being the exception. De-identification did not significantly affect performance, and removing facial features preserved detection accuracy. The lip-distance method effectively distinguished crying behavior.
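PCK counts a predicted keypoint as correct when it lies within a threshold distance of its annotation, where the threshold is a multiple of some normalising length. The abstract reports PCK@1 without stating that length, so in the sketch below `ref_len` is a placeholder (for example, a per-frame head- or torso-size measure) and `alpha=1.0` simply mirrors the "@1"; both readings are assumptions. The hypothetical `per_keypoint_pck` pools over frames to give one score per keypoint, the form in which Table 1-2 reports results.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def pck(pred: Sequence[Point], gt: Sequence[Point], ref_len: float, alpha: float = 1.0) -> float:
    """Fraction of keypoints whose prediction lies within alpha * ref_len of the ground truth."""
    if not gt or len(pred) != len(gt):
        raise ValueError("pred and gt must be non-empty and of equal length")
    hits = sum(1 for p, g in zip(pred, gt) if math.dist(p, g) <= alpha * ref_len)
    return hits / len(gt)


def per_keypoint_pck(preds: Sequence[Sequence[Point]], gts: Sequence[Sequence[Point]],
                     ref_lens: Sequence[float], alpha: float = 1.0) -> List[float]:
    """PCK for each keypoint index, pooled over frames.

    preds[f][k] and gts[f][k] are (x, y) for keypoint k in frame f;
    ref_lens[f] is the (assumed) normalising length for frame f.
    """
    n_frames, n_kp = len(gts), len(gts[0])
    scores = []
    for k in range(n_kp):
        hits = sum(1 for f in range(n_frames)
                   if math.dist(preds[f][k], gts[f][k]) <= alpha * ref_lens[f])
        scores.append(hits / n_frames)
    return scores
```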

Conclusion(s): This non-invasive, video-based pose estimation model improves the monitoring of infant movements while eliminating the need for traditional sensors. It is a valuable tool for neonatal and pediatric researchers and practitioners, supporting assessment and intervention strategies in infant development, with potential for real-time application.

Characteristics of enrolled infants and PCKs for each keypoint.

Table 1. Characteristics of enrolled infants who underwent video recording (Table 1-1) and the percentage of correct keypoints (PCK) for each keypoint across three models (Table 1-2).

Overview of the algorithm’s process and the ResUnet architecture employed for infant pose estimation.

Figure 1. Overview of the algorithm’s process and the ResUnet architecture employed for infant pose estimation. (A) Video recording setup. (B) An example of keypoint detection, in which captured video frames are processed to display manually corrected keypoints that form a skeletal representation (stickbaby) of the infant. (C) The keypoint array, giving the x and y coordinates of each keypoint across multiple frames. (D) Schematic of the final model architecture, which uses these keypoints to build a robust pose estimation framework. (E) Schematic flow of the ResUnet architecture used for infant pose estimation in the present study.
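Simple Baselines and HRNet are conventionally trained to regress one Gaussian heatmap per keypoint, with coordinates read back from each heatmap's peak; the Methods state that annotations here were likewise converted to ground-truth heatmaps. The sketch below assumes that scheme: a Gaussian target with `sigma=2.0` (a common but assumed value), plain argmax decoding without the sub-pixel refinement many implementations add, and stacking of per-frame results into the frame-by-keypoint (x, y) array of panel (C). All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Heatmap = List[List[float]]  # heatmap[row][col]


def gaussian_heatmap(x: float, y: float, width: int, height: int, sigma: float = 2.0) -> Heatmap:
    """Ground-truth target: unnormalised 2-D Gaussian (peak 1.0) centred on keypoint (x, y)."""
    return [[math.exp(-((c - x) ** 2 + (r - y) ** 2) / (2 * sigma ** 2))
             for c in range(width)]
            for r in range(height)]


def decode_heatmap(hm: Heatmap) -> Tuple[int, int]:
    """Return the (x, y) pixel with the highest response (argmax decoding)."""
    _, x, y = max((v, c, r) for r, row in enumerate(hm) for c, v in enumerate(row))
    return x, y


def frames_to_keypoint_array(frames: Sequence[Sequence[Heatmap]]) -> List[List[Tuple[int, int]]]:
    """frames[t][k] is the heatmap of keypoint k in frame t; returns arr[t][k] = (x, y)."""
    return [[decode_heatmap(hm) for hm in frame] for frame in frames]
```

Encoding and decoding are inverses at integer positions, which is a quick sanity check on any heatmap pipeline before training.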

Overview of visualization for assessing keypoints of infants and the data collection methodology.

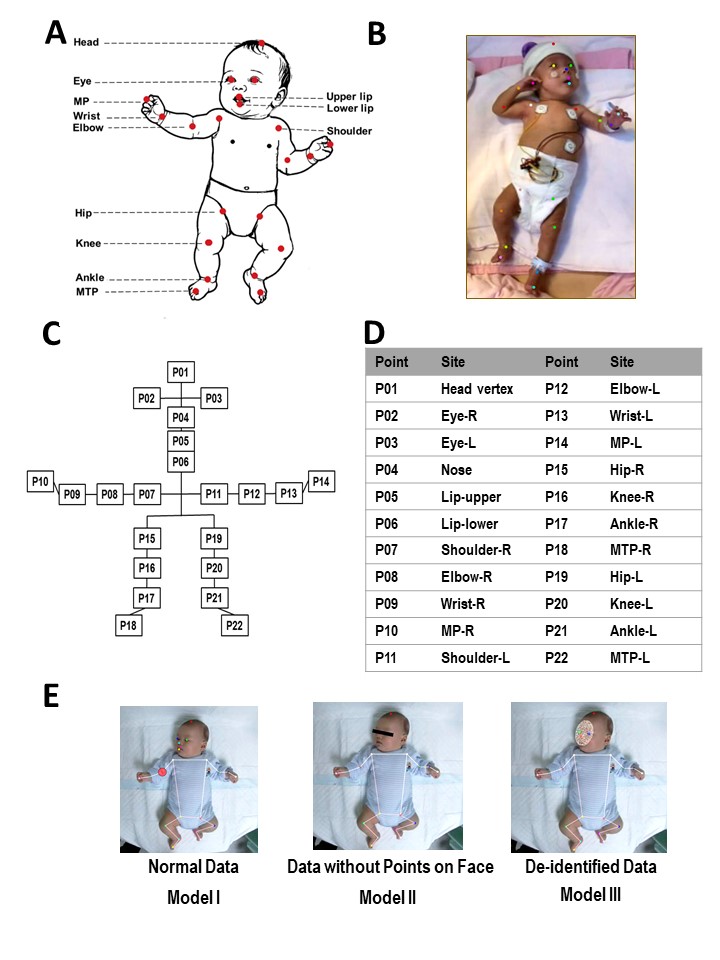

Figure 2. Overview of visualization for assessing keypoints of infants and the data collection methodology. (A) Schematic of the key anatomical landmarks on an infant model. (B) An example of a participating preterm infant, with identified anatomical points shown as colored markers at the corresponding anatomical sites. (C) Diagram of the relationships between anatomical points P01 through P22. (D) Table listing each anatomical point (P01-P22) and its corresponding anatomical site; points are recorded for both the right (R) and left (L) sides. (E) Illustrations of the three models: Model I, normal data with all points visible; Model II, data without face points; Model III, de-identified data.
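Model III uses de-identified data, and the Methods state only that faces were blurred. The sketch below assumes the face region is the bounding box of the facial keypoints, padded by `margin` pixels, and that a mean (box) blur of `radius` pixels is applied inside it on a grayscale image stored as nested lists. Which of P01 to P22 are facial is defined in panel (D), so the `face_points` input is hypothetical, as are the margin and radius values; a production pipeline would more likely use an image-processing library on full-color frames.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]  # grayscale, image[row][col]
Point = Tuple[float, float]
Box = Tuple[int, int, int, int]  # x0, y0, x1, y1 (inclusive)


def face_box(face_points: Sequence[Point], margin: int, width: int, height: int) -> Box:
    """Padded bounding box around the (hypothetical) facial keypoints, clipped to the image."""
    xs = [p[0] for p in face_points]
    ys = [p[1] for p in face_points]
    return (max(0, math.floor(min(xs)) - margin),
            max(0, math.floor(min(ys)) - margin),
            min(width - 1, math.ceil(max(xs)) + margin),
            min(height - 1, math.ceil(max(ys)) + margin))


def blur_region(img: Image, box: Box, radius: int = 2) -> Image:
    """Mean (box) blur applied only inside `box`; pixels outside are copied unchanged."""
    h, w = len(img), len(img[0])
    x0, y0, x1, y1 = box
    out = [row[:] for row in img]  # leave the input image untouched
    for r in range(y0, y1 + 1):
        for c in range(x0, x1 + 1):
            # Average the original pixels in a (2*radius+1)^2 window, clipped at borders.
            window = [img[rr][cc]
                      for rr in range(max(0, r - radius), min(h, r + radius + 1))
                      for cc in range(max(0, c - radius), min(w, c + radius + 1))]
            out[r][c] = sum(window) / len(window)
    return out
```

Blurring only the padded face box leaves the limb regions of the frame untouched.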
