Technology 1: AI/Machine Learning

Session: Technology 1: AI/Machine Learning

171 - Comparison of Traditional Literature Searches and Large Language Models (LLMs) for Answering Pediatric Clinical Scenario Questions

Saturday, April 26, 2025

2:30pm - 4:45pm HST

Publication Number: 171.4115

Emma Albrecht, Phoenix Children's Hospital, Phoenix, AZ, United States; Rachel Kortman, Phoenix Children's Hospital, Phoenix, AZ, United States; Kathy Zeblisky, Phoenix Children's Hospital, Phoenix, AZ, United States; Rebecca Birr, Valleywise Health, Phoenix, AZ, United States; Anna Gary, Phoenix Children's Hospital, Phoenix, AZ, United States; Jessica Wickland, Phoenix Children's Hospital, Phoenix, AZ, United States; Joanna Kramer, Phoenix Children's Hospital, Phoenix, AZ, United States; Jaskaran Rakkar, Phoenix Children's Hospital, Phoenix, AZ, United States; Karen Yeager, Phoenix Children's, Phoenix, AZ, United States

Emma Albrecht, MD (she/her/hers)

PGY-2

Phoenix Children's Hospital

Phoenix, Arizona, United States

Presenting Author(s)

Background: Large Language Models (LLMs) offer physicians an opportunity to find quick answers to clinical questions. However, the accuracy and reliability of LLM-generated information in pediatrics are unknown.

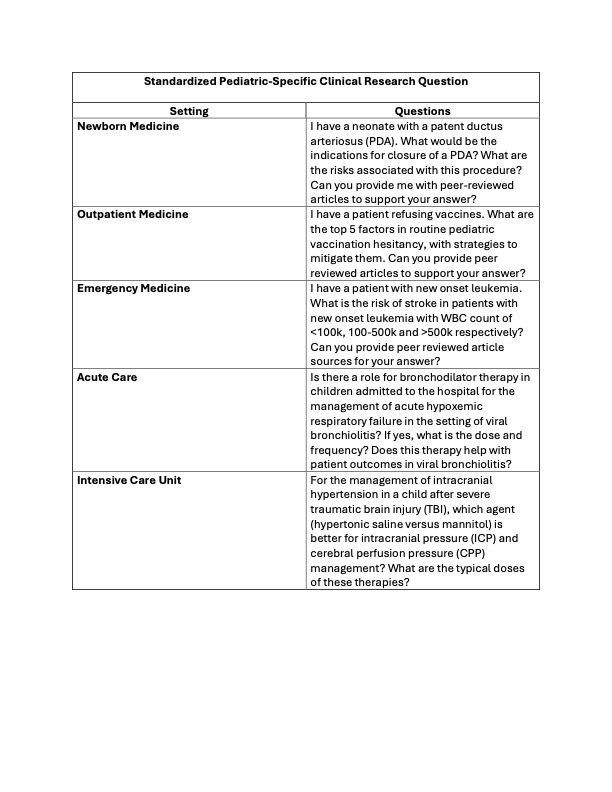

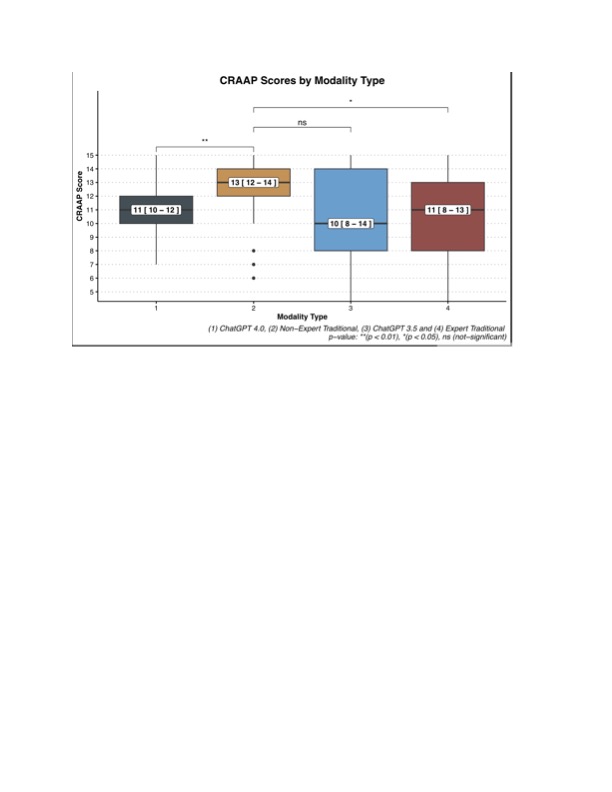

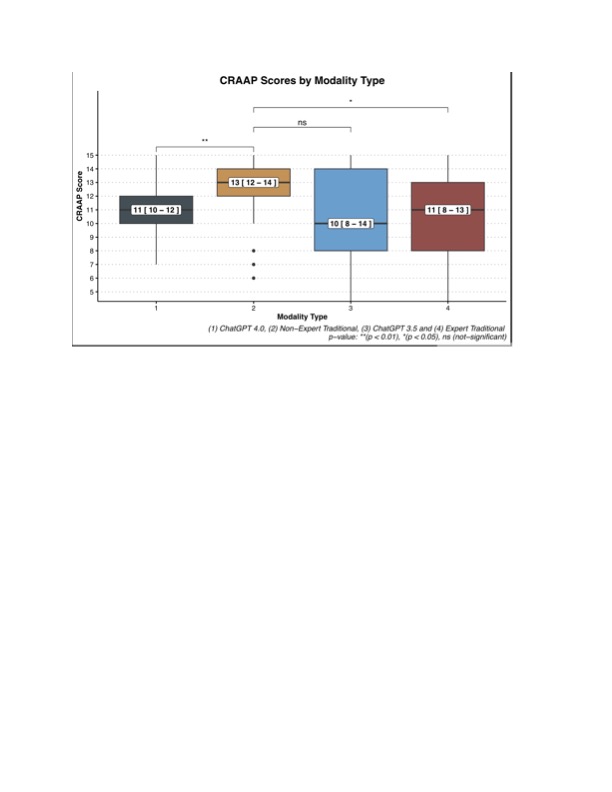

Objective: We aimed to compare the reliability of LLM-generated answers with that of established literature searches using the CRAAP score (Currency, Relevance, Authority, Accuracy and Purpose), a standardized method for evaluating the quality and reliability of a source for research purposes.

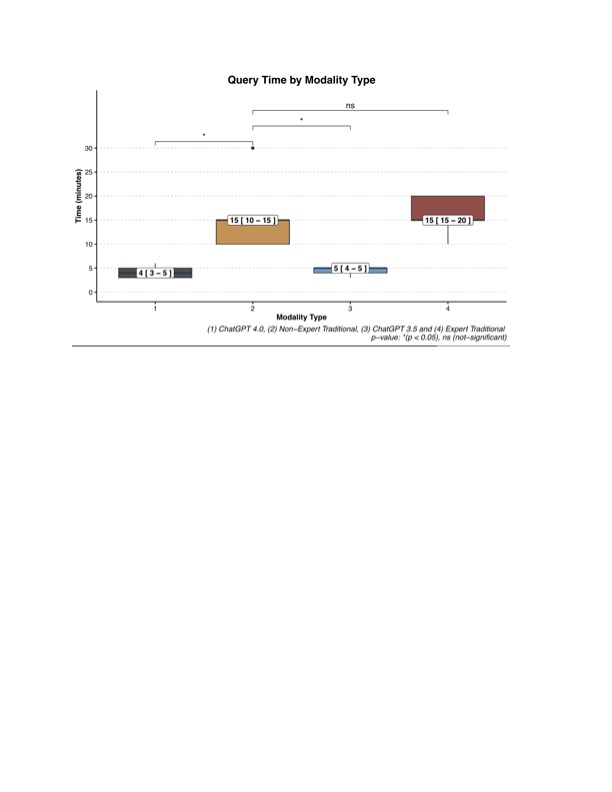

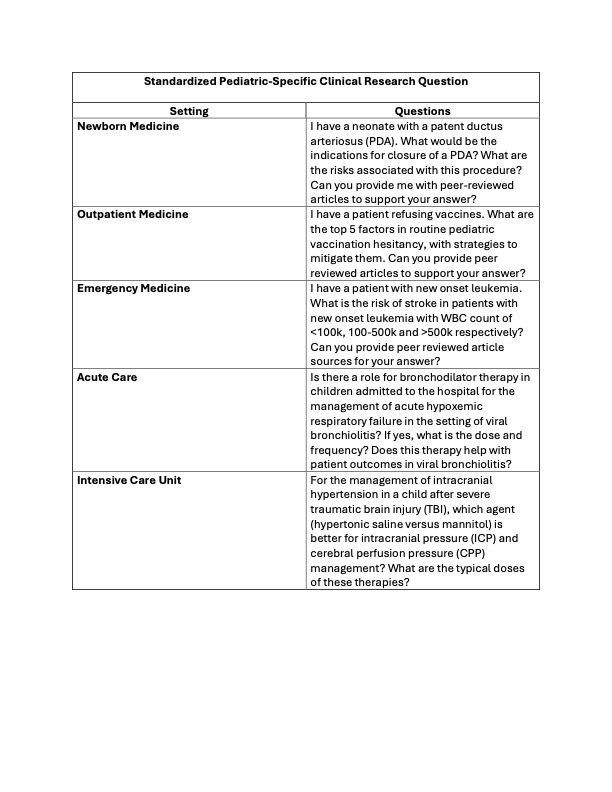

Design/Methods: Standardized pediatric-specific clinical research questions were developed to reflect clinical scenarios in five pediatric settings (Table 1). Each question was then answered using four separate methods: (A) a non-expert traditional search by a pediatric resident physician using PubMed and UpToDate; (B) an expert traditional search by a medical librarian using PubMed, whose results were then summarized by the pediatric resident physician; (C) ChatGPT 3.5; and (D) ChatGPT 4.0. The time each modality took to answer each question was recorded. Answers were blinded and then scored with the CRAAP method by five pediatric content experts (attending physicians in pediatric critical care, neonatology, pediatric emergency medicine, hospital medicine and outpatient medicine), who performed their evaluations without knowing which modality produced each answer. Median CRAAP scores were compared with the Wilcoxon rank-sum test, with statistical significance defined as p < 0.05. Modalities were unblinded only after the analysis was completed.
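As a minimal illustration of the Wilcoxon rank-sum comparison described above, the sketch below implements a two-sided test using the normal approximation with a tie correction. The function names and the choice of the normal approximation (rather than an exact test) are assumptions for illustration only; the abstract does not state which variant or software was used, and any inputs shown are placeholders, not study data.

```python
import math
from typing import Sequence


def rank_with_ties(values: Sequence[float]) -> list[float]:
    """Assign 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values in sorted order.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def wilcoxon_rank_sum(x: Sequence[float], y: Sequence[float]) -> tuple[float, float, float]:
    """Two-sided rank-sum test (Mann-Whitney U form) using the normal approximation
    with tie correction and no continuity correction. Returns (U for x, z, p)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups need at least one observation")
    pooled = list(x) + list(y)
    ranks = rank_with_ties(pooled)
    u_x = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # U statistic for group x
    mean_u = n1 * n2 / 2
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    tie_counts: dict[float, int] = {}
    for v in pooled:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:
        return u_x, 0.0, 1.0  # every value identical: no evidence of a difference
    z = (u_x - mean_u) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))
    return u_x, z, p
```

For example, `wilcoxon_rank_sum([1, 2, 3], [4, 5, 6])` returns U = 0 and a two-sided p of roughly 0.05. With samples this small an exact test is normally preferred; in practice `scipy.stats.mannwhitneyu` offers both exact and asymptotic methods.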

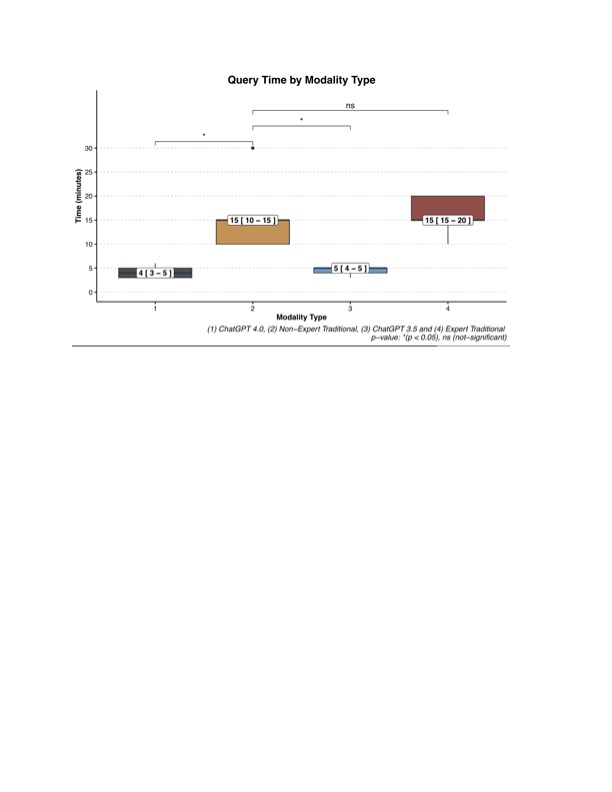

Results: The non-expert traditional search had the highest CRAAP score (Figure 1), and the difference was statistically significant compared with ChatGPT 4.0 (p=0.002) and the expert traditional search (p=0.02). CRAAP scores did not differ significantly between the non-expert traditional search and ChatGPT 3.5. Cohen’s kappa was 0.01 (p=0.63), indicating minimal, non-significant agreement between raters. Search times with ChatGPT 3.5 and 4.0 were notably shorter than with either traditional search (Figure 2).
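Cohen's kappa compares the observed agreement between two raters (p_o) with the agreement expected by chance from each rater's category frequencies (p_e): kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it for a single pair of raters over categorical ratings. How the study combined its five raters into one kappa value is not stated in the abstract, and the function name and inputs here are hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who each assigned one category per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same category by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the two raters' marginal proportions,
    # summed over every category either rater used.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if p_e == 1:
        return math.nan  # both raters used one identical category: kappa is undefined
    return (p_o - p_e) / (1 - p_e)
```

A kappa of 1 means perfect agreement, while values near 0, like the 0.01 reported above, mean the raters agreed about as often as chance alone would predict.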

Conclusion(s): Our study found that the non-expert traditional literature search produced answers of the highest quality and reliability, significantly outperforming both ChatGPT 4.0 and the expert traditional search. That non-expert traditional searches outperformed expert traditional searches suggests that clinicians are more accurate than search experts without a clinical background at finding clinically relevant information. Although traditional searches take longer, our results support the superiority of current search methods over LLMs in accuracy and reliability when answering clinical questions.

Table 1

Standardized Pediatric-Specific Clinical Research Questions

Figure 1

CRAAP Scores by Modality Type

Figure 2

Query Time by Modality Type