Technology 1: AI/Machine Learning

Session: Technology 1: AI/Machine Learning

168 - AI-VOICE: a Method to Measure Caregiver Values for AI-triggered Actions

Saturday, April 26, 2025

2:30pm - 4:45pm HST

Publication Number: 168.3858

Gabriel Tse, Stanford University School of Medicine, Palo Alto, CA, United States; Nigam Shah, Stanford University School of Medicine, Palo Alto, CA, United States; Keith Morse, Stanford University School of Medicine, Palo Alto, CA, United States

Presenting Author: Gabriel Tse, MD, MS, Clinical Assistant Professor, Stanford University School of Medicine, Palo Alto, California, United States

Background: Patients and caregivers are the ultimate recipients of the benefits and costs of artificial intelligence (AI) in healthcare, but their involvement in AI development and deployment is limited. A crucial step in AI implementation is choosing the risk threshold at which an intervention is triggered, which requires weighing the trade-offs of each AI-triggered action. Consider a neonatal sepsis prediction algorithm: misclassifying a neonate as septic (false positive) leads to unnecessary medical interventions, while misclassifying a septic neonate as not septic (false negative) risks catastrophic clinical deterioration. Although one outcome is clearly worse, how much worse is a subjective judgement. Few tools currently exist to measure caregiver values for such trade-offs. Consequently, these trade-offs are often judged by AI model developers and clinicians, who may not accurately represent the values of caregivers.

Objective: Develop a novel method to quantitatively measure caregiver values for outcomes of hypothetical AI-triggered actions.

Design/Methods: A multidisciplinary team of clinicians, data scientists, and software engineers was formed to discuss and develop this method. The purpose, use cases, and must-haves of the method were defined prior to development.

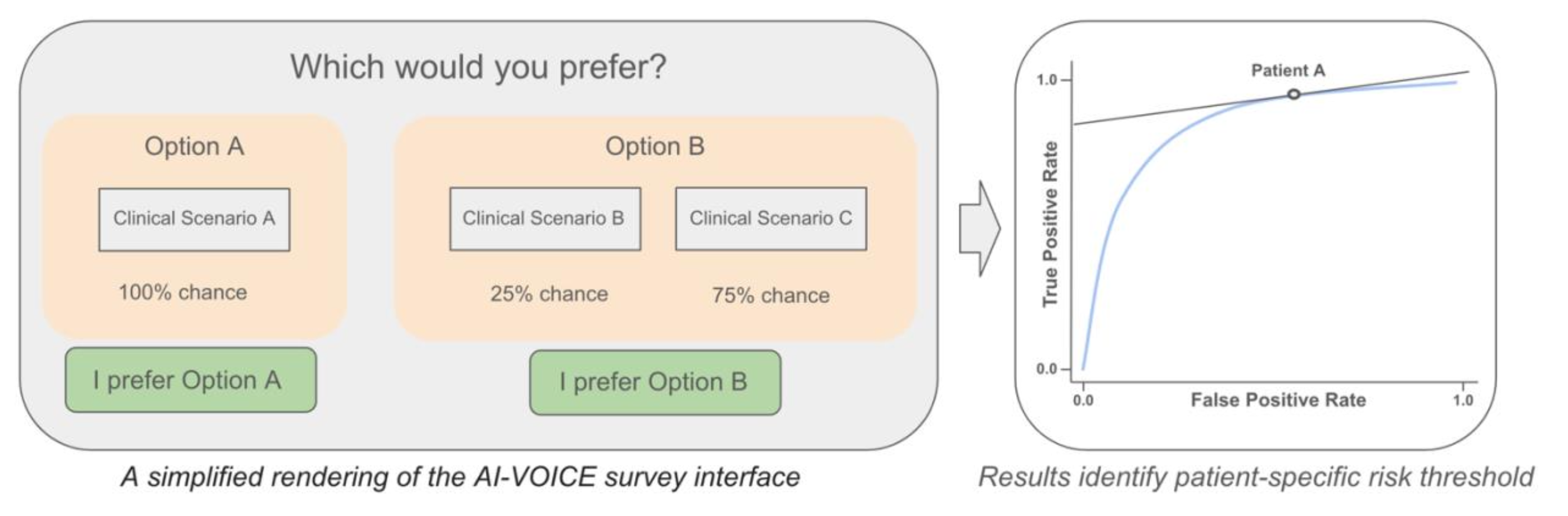

Results: AI-VOICE (Values-Oriented Implementation and Context Evaluation) was developed as a web-based survey tool that measures caregiver values for AI-triggered actions using a standard gamble framework (https://ai-voice.stanford.edu). Each completed survey maps to a point on the AI algorithm’s receiver operating characteristic curve, suggesting a caregiver-optimized risk threshold (Figure 1). The tool is intended for health systems that are integrating AI tools into local care delivery processes, as part of routine AI model evaluation. It can be used for both clinical and operational AI applications, and it is straightforward enough to be used by health systems without specialized expertise.
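As an illustration of how a survey response could correspond to a point on a receiver operating characteristic curve, the following is a minimal sketch and not the AI-VOICE implementation. It assumes that a completed survey can be summarized as a single number, here called `harm_ratio`, expressing how many times worse a caregiver considers a false negative than a false positive. The names `RocPoint`, `expected_harm`, `caregiver_optimal_point`, and `EXAMPLE_ROC`, as well as the prevalence and curve values, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RocPoint:
    threshold: float  # risk score at or above which the action is triggered
    tpr: float        # true positive rate (sensitivity) at this threshold
    fpr: float        # false positive rate (1 - specificity) at this threshold


def expected_harm(point: RocPoint, prevalence: float, harm_ratio: float) -> float:
    """Expected harm per patient, in units of one false positive.

    harm_ratio is an assumed summary of a survey response: how many times
    worse a false negative is judged to be than a false positive.
    """
    false_negative_rate = prevalence * (1.0 - point.tpr)
    false_positive_rate = (1.0 - prevalence) * point.fpr
    return harm_ratio * false_negative_rate + false_positive_rate


def caregiver_optimal_point(roc, prevalence: float, harm_ratio: float) -> RocPoint:
    """Return the ROC operating point with the lowest expected harm."""
    return min(roc, key=lambda p: expected_harm(p, prevalence, harm_ratio))


# Illustrative (made-up) operating points for a hypothetical sepsis model.
EXAMPLE_ROC = [
    RocPoint(threshold=0.90, tpr=0.40, fpr=0.02),
    RocPoint(threshold=0.50, tpr=0.70, fpr=0.10),
    RocPoint(threshold=0.20, tpr=0.90, fpr=0.30),
    RocPoint(threshold=0.05, tpr=0.98, fpr=0.60),
]

# A caregiver who rates a missed case as 50 times worse than a false alarm,
# with an assumed prevalence of 5%.
best = caregiver_optimal_point(EXAMPLE_ROC, prevalence=0.05, harm_ratio=50.0)
print(best.threshold)
```

Under these assumptions, a larger `harm_ratio` pushes the selected point toward lower thresholds (more alerts, fewer missed cases), while a smaller one favors higher thresholds. How AI-VOICE itself converts standard gamble responses into an operating point is not specified in this abstract.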

Conclusion(s): AI-VOICE is the first tool to offer an accessible, quantitative method for incorporating caregiver perspectives on AI-triggered actions, and health systems can use it to fine-tune their AI models to better reflect caregiver values. Incorporating caregiver values into AI-informed actions may improve patient and caregiver trust in using AI for clinical decision support. Further research, including validation of this method with real-world AI models and caregivers, is needed before AI-VOICE is implemented.

Figure 1