Technology 1: AI/Machine Learning

Session: Technology 1: AI/Machine Learning

169 - Assessing Performance of Large Language Models in Answering Image-Based Neonatology Questions

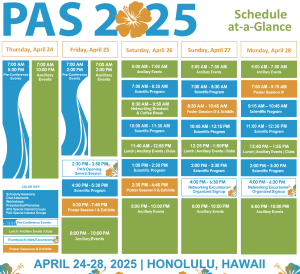

Saturday, April 26, 2025

2:30pm - 4:45pm HST

Publication Number: 169.3931

Ashley Cowan, University of Colorado School of Medicine, Aurora, CO, United States; James S. Barry, University of Colorado School of Medicine, Centennial, CO, United States

Ashley Cowan, MD (she/her/hers)

Neonatology Fellow

University of Colorado School of Medicine

Aurora, Colorado, United States

Presenting Author(s)

Background: ChatGPT, Claude, and Gemini are publicly available large language models (LLMs) that excel at recognizing and generating human-like language. Some LLMs have achieved passing scores on multiple text-based medical licensing exams. Their performance on image-based, board-style questions in neonatology remains underexplored.

Objective: Compare the correctness and consistency of ChatGPT4o, Gemini, and Claude on image-based neonatology questions, and assess whether advanced prompts that incorporate role specification and chain-of-thought reasoning improve performance.

Design/Methods: This comparative study assessed ChatGPT4o, Claude 3.5 Sonnet, and Gemini 1.5 Flash on 66 image-based neonatology questions drawn from Neonatology Review: Q&A, 3rd edition, used with permission from the editors. Each model received a simple prompt in each of three trials, spaced two weeks apart, to assess accuracy and consistency. ChatGPT4o was further tested with advanced prompts using role specification and chain-of-thought reasoning. Accuracy, consistency, and response variance were evaluated using descriptive statistics and chi-square tests.
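The descriptive measures named above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis code: the function names and the toy answer data are hypothetical, the use of sample (n - 1) variance is an assumption, and "repeated responses" is assumed to mean the same answer choice in all three trials.

```python
from statistics import mean, stdev, variance

def correct_counts(trials, answer_key):
    """Return the number of correct answers in each trial."""
    return [sum(a == k for a, k in zip(trial, answer_key)) for trial in trials]

def repeated_answers(trials):
    """Count questions that received the same answer in every trial."""
    return sum(len(set(answers)) == 1 for answers in zip(*trials))

# Hypothetical toy data: 5 questions, 3 trials (not the study's data).
answer_key = ["A", "C", "B", "D", "A"]
trials = [
    ["A", "C", "B", "D", "B"],  # trial 1
    ["A", "B", "D", "D", "B"],  # trial 2
    ["A", "C", "D", "B", "A"],  # trial 3
]

counts = correct_counts(trials, answer_key)
percent_correct = [100 * c / len(answer_key) for c in counts]

print("correct per trial:", counts)
print("mean % correct:", mean(percent_correct))
print("SD of % correct:", stdev(percent_correct))       # sample SD (assumed)
print("variance of correct counts:", variance(counts))  # sample variance (assumed)
print("questions with repeated answers:", repeated_answers(trials))
```

Note that the repeated-answer count ignores correctness, so a model can be stable while being consistently wrong.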

Results: ChatGPT4o correctly answered 59.6% (± 3.2%) of the 66 questions, followed by Claude at 51% (± 3.2%); both outperformed Gemini, which answered 23.7% (± 5.3%) correctly. ChatGPT4o and Claude had equal correct-response consistency (variance = 4.33), while Gemini showed greater variability (variance = 12.33). ChatGPT4o repeated responses for 46 of 66 questions (70%), Claude for 44 of 66 (67%), and Gemini for 48 of 66 (73%), reflecting relative stability in responses regardless of accuracy. ChatGPT4o and Claude provided unique explanations for each response, and only 3% of Gemini’s explanations were identical. On radiology questions, ChatGPT4o answered 73% correctly, Claude 26.7%, and Gemini 0%. Advanced prompting increased ChatGPT4o’s average correct response rate to 66.7% (± 5.3%), although the increase was not statistically significant (χ² = 1.83, p = 0.116). ChatGPT4o demonstrated similar consistency with advanced prompting, repeating answers for 47 of 66 questions (71%).
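A chi-square comparison of simple versus advanced prompting can be sketched as follows. The abstract does not state how the test was set up, so everything here is an assumption: responses are pooled across the three trials (198 per condition), the correct counts are back-calculated from the reported percentages (59.6% and 66.7%), and a Yates continuity correction is applied. The function name `chi2_2x2` is hypothetical.

```python
import math

def chi2_2x2(a, b, c, d, yates=True):
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]] (1 df).

    Returns (statistic, two-sided p-value).
    """
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        # Continuity correction, floored at zero.
        diff = max(0.0, diff - n / 2)
    stat = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Survival function of a chi-square variable with 1 degree of freedom.
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p

# Assumed pooled counts: 66 questions x 3 trials = 198 responses per condition.
total = 66 * 3
simple_correct = round(0.596 * total)    # back-calculated, not reported directly
advanced_correct = round(0.667 * total)  # back-calculated, not reported directly

stat, p = chi2_2x2(
    simple_correct, total - simple_correct,
    advanced_correct, total - advanced_correct,
)
print(f"chi-square = {stat:.2f}, p = {p:.3f}")
```

Under these assumed counts the continuity-corrected statistic comes out at about 1.83, which is consistent with the reported value but does not confirm that the responses were pooled this way.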

Conclusion(s): This study shows that LLMs are less accurate on image-based neonatology questions than previously reported for text-based neonatology questions. Both ChatGPT4o and Claude answered more than 50% of questions correctly and outperformed Gemini. Refined prompting techniques provided only modest performance gains for ChatGPT4o. Further research, including new prompting approaches, will be essential to fully assess the potential of these models as they continue to evolve.