Technology 1: AI/Machine Learning

Session: Technology 1: AI/Machine Learning

181 - The Use of Large Language Models for Summarizing Progress Notes in the Cardiovascular Intensive Care Unit

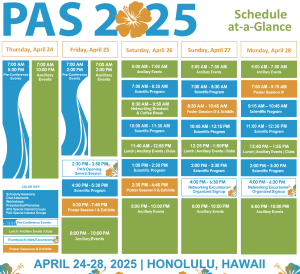

Saturday, April 26, 2025

2:30pm - 4:45pm HST

Publication Number: 181.6985

Vanessa Klotzman, CHOC Children's Hospital of Orange County, Los Angeles, CA, United States; Louis Ehwerhemuepha, CHOC Children's Hospital of Orange County, Orange, CA, United States; Robert B. Kelly, CHOC Children's, Orange, CA, United States

Vanessa Klotzman, MS (she/her/hers)

Graduate Intern

CHOC Children's Hospital of Orange County

Los Angeles, California, United States

Presenting Author(s)

Background: Effective communication of patient information is crucial in critical care settings, such as the Cardiovascular Intensive Care Unit (CVICU), to ensure patient safety and continuity of care. However, clinical notes from various medical professionals can be lengthy and complex, creating barriers to efficient information transfer. Summarization using advanced language models, like the Mistral Artificial Intelligence (AI) Large Language Model (LLM), offers a potential solution to streamline information.

Objective: This study evaluates the effectiveness of the Mistral AI model in summarizing clinical notes from the CVICU, focusing on readability, accuracy, and information retention compared to concatenated original notes. The I-PASS tool (AHRQ), a structured communication framework for patient handoff, was used in generating summaries from CVICU progress notes.

Design/Methods: A sample of 385 clinical progress notes from patients in a CVICU was used. For each patient’s stay, notes across all shifts were concatenated into a single text. Summaries were generated using the Mistral AI model. Readability was assessed using the Flesch Reading Ease, Flesch-Kincaid Grade Level, Gunning Fog Index, SMOG Index, Automated Readability Index, and Dale-Chall Score. Additionally, cosine similarity scores were calculated to gauge alignment between the summaries and the original notes.
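
As an illustration of two of the readability metrics listed above, the following is a minimal sketch of the standard Flesch Reading Ease and Flesch-Kincaid Grade Level formulas. It is not the study's implementation: the function names, the regex-based sentence and word splitting, and the vowel-group syllable heuristic are all assumptions made for this example, and dedicated readability libraries count syllables more carefully.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption): count groups of consecutive vowels."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing silent "e" as non-syllabic (e.g. "note"), but keep "-le" (e.g. "table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text: str) -> tuple[int, int, int]:
    """Return (sentence count, word count, syllable count) for a text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return len(sentences), len(words), syllables


def flesch_reading_ease(text: str) -> float:
    """Higher scores indicate easier text."""
    s, w, syl = text_stats(text)
    return 206.835 - 1.015 * (w / s) - 84.6 * (syl / w)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate U.S. school grade level needed to read the text."""
    s, w, syl = text_stats(text)
    return 0.39 * (w / s) + 11.8 * (syl / w) - 15.59
```

Both formulas depend only on average sentence length and average syllables per word, so dense clinical vocabulary and long sentences push the Reading Ease score down and the grade level up.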

Results: The AI-generated summaries were harder to read than the original concatenated notes, with a Flesch Reading Ease score of 29.25 compared to 56.89 for the original notes. The summaries require college-level reading ability (Flesch-Kincaid Grade 15.24), whereas the original notes are accessible at roughly a 9th-grade level (Flesch-Kincaid Grade 8.98). A cosine similarity score of 0.6 suggests moderate alignment: key information appears to be retained, but some details may be simplified or omitted.
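
To make the cosine similarity figure concrete, here is a minimal sketch of how such a score can be computed between a note and its summary. The abstract does not state which text representation was used (for example raw term counts, TF-IDF, or embeddings), so the bag-of-words term counts below, along with the function names, are assumptions chosen for illustration only.

```python
import math
import re
from collections import Counter


def term_counts(text: str) -> Counter:
    """Lowercase the text and count alphanumeric tokens (an assumed representation)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(text_a: str, text_b: str) -> float:
    """Cosine of the angle between the two term-count vectors, in [0, 1]."""
    va, vb = term_counts(text_a), term_counts(text_b)
    # Only terms shared by both texts contribute to the dot product.
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text has no direction to compare
    return dot / (norm_a * norm_b)
```

Under this representation, a score of 1 means the two texts use the same terms in the same proportions and 0 means they share no terms. The score measures lexical overlap, so it cannot by itself confirm that clinically important details were preserved.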

Conclusion(s): The Mistral AI model condenses complex clinical information, though at a readability level suited to trained professionals. While promising for supporting summarization in critical care, further refinement is needed to improve readability without losing essential content. Future analyses will include a group of providers who will manually annotate the summaries to provide clinician-based metrics for the quality of the generated summaries.