Technology 1: AI/Machine Learning

182 - Vision Image Transformers Improve Anatomic Landmark Tracking from Video Data on Infants in an Intensive Care Unit Setting

Saturday, April 26, 2025

2:30pm - 4:45pm HST

Publication Number: 182.7089

Florian Richter, Artemis.AI, La Jolla, CA, United States; Alec Gleason, Albert Einstein College of Medicine, East Brunswick, NJ, United States; Mark Waechter, Artemis.AI, Chicago, IL, United States; Katherine Guttmann, Icahn School of Medicine at Mount Sinai, New York, NY, United States; Girish Nadkarni, Icahn School of Medicine at Mount Sinai, New York, NY, United States; Madeline Fields, Icahn School of Medicine at Mount Sinai, New York, NY, United States; Felix Richter, The Mount Sinai Kravis Children's Hospital, New York City, NY, United States

Felix Richter, MD, PhD (he/him/his)

Hospitalist and Instructor

The Mount Sinai Kravis Children's Hospital

New York City, New York, United States

Presenting Author(s)

Background: Neonatal movement abnormalities may reflect lethargy, irritability, or neurologic pathology such as seizures and encephalopathy. Pose AI, a computer vision method that tracks anatomic landmarks in video, is a promising tool for monitoring infant movement. However, prior work used decade-old algorithms with limited ability to detect subtle, fast, or complex movements.

Objective: We hypothesized that vision image transformers (ViT) could improve infant pose AI landmark detection. Transformer architectures also power other recent AI advances such as ChatGPT.

Design/Methods: We retrospectively collected video EEG data from infants with corrected age ≤1 year. We labeled up to 14 anatomic landmarks per frame in 25 frames per infant. We used these data to train a custom Pose ViT model optimized for infants. The backbone of our algorithm is Meta/Facebook AI Research's DINOv2-G, a 1.1 billion parameter model trained on 142 million images. We appended three deconvolution layers that output 14 landmark heatmap predictions, fine-tuned the model with a low-rank adapter (LoRA), and improved robustness with AutoAugment. We evaluated performance with L2 pixel error, area under the receiver operating characteristic curve (ROC-AUC), and percentage of correct key points (PCK), i.e., the percentage of key points within a reference distance threshold. A PCK >90% is comparable to state-of-the-art adult algorithms. We benchmarked against published infant Pose AI results that used a ResNet model from 2015.
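To illustrate how per-landmark heatmaps such as those described above can be converted into pixel coordinates, the following minimal sketch takes the highest-scoring pixel of each heatmap. The abstract does not state how heatmaps were decoded, so the argmax rule, the function names `decode_heatmap` and `decode_landmarks`, and the toy 2x3 heatmaps are assumptions for illustration only.

```python
from typing import List, Tuple

# A heatmap is a list of rows (y axis), each row a list of scores (x axis).
Heatmap = List[List[float]]


def decode_heatmap(heatmap: Heatmap) -> Tuple[int, int, float]:
    """Return (x, y, peak_score) for the highest-scoring pixel.

    Assumption: the landmark is placed at the heatmap argmax. Ties keep
    the first pixel encountered in row-major order.
    """
    best_x, best_y, best_score = 0, 0, float("-inf")
    for y, row in enumerate(heatmap):
        for x, score in enumerate(row):
            if score > best_score:
                best_x, best_y, best_score = x, y, score
    return best_x, best_y, best_score


def decode_landmarks(heatmaps: List[Heatmap]) -> List[Tuple[int, int, float]]:
    """Decode one (x, y, peak_score) triple per landmark heatmap."""
    return [decode_heatmap(h) for h in heatmaps]


# Toy input: two landmarks, each with a 2x3 heatmap (hypothetical values).
toy_heatmaps = [
    [[0.0, 0.1, 0.0],
     [0.2, 0.9, 0.1]],
    [[0.7, 0.0, 0.0],
     [0.0, 0.3, 0.0]],
]
landmarks = decode_landmarks(toy_heatmaps)
print(landmarks)
```

In a real pipeline the model would emit 14 such heatmaps per frame, and the peak score could serve as a rough confidence value; both uses are assumptions rather than details reported here.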

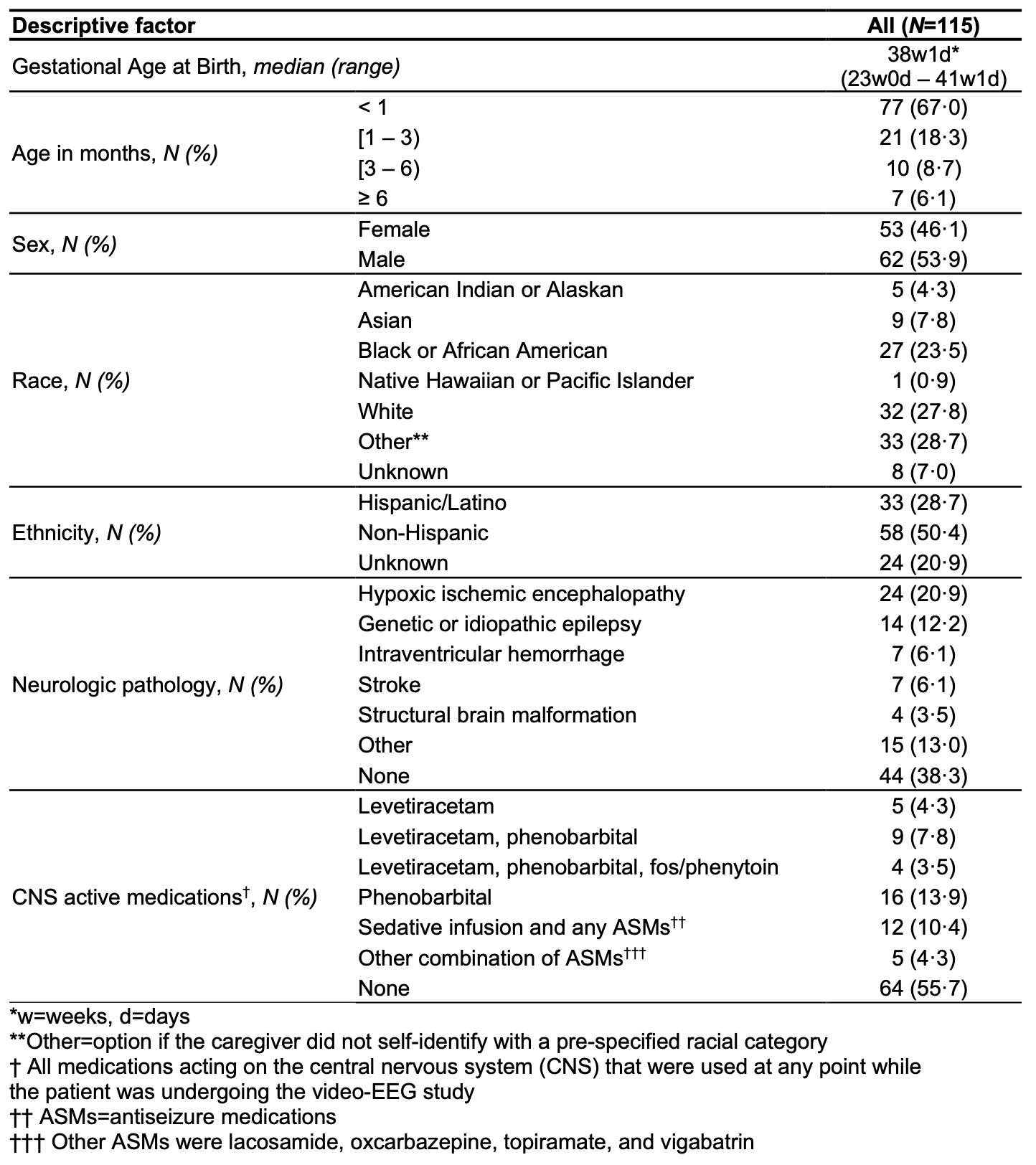

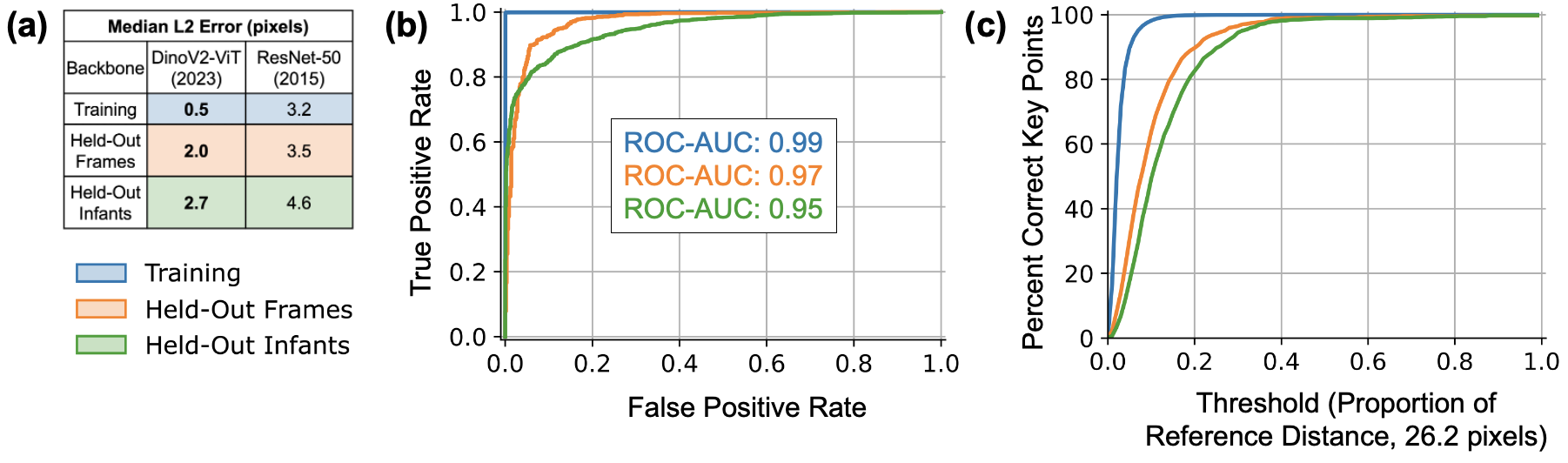

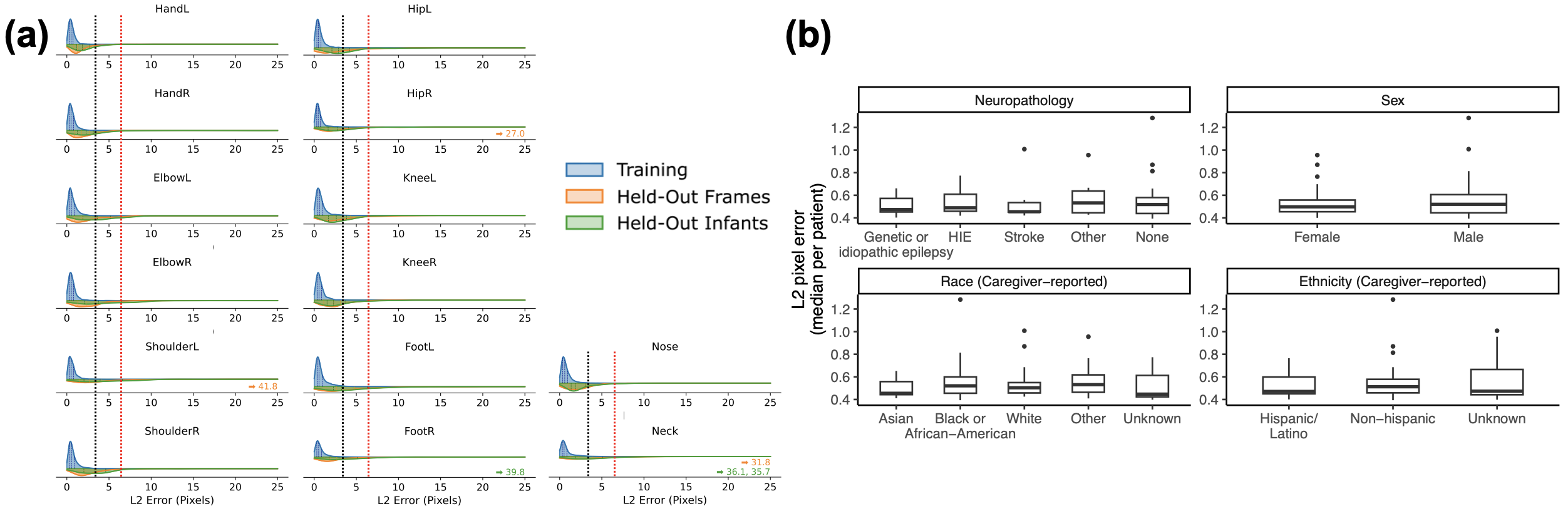

Results: We analyzed the largest published video-EEG dataset to date (282,301 video minutes, 115 infants) (Table 1). Most infants were born at term, were <1 month corrected age, and had neurologic pathology. Pose ViT had high performance across three evaluation datasets, with low L2 error (0.5–2.7 pixels), high ROC-AUCs (0.95–0.99), and high PCK (>98%) (Fig 1). Furthermore, compared to prior work, we observed a 6-fold improvement in the rate of extreme errors >25 pixels (Fisher's P=3×10^-6). The pixel error was also 10-fold lower than human inter-rater variability with similar labeling tools. Pose ViT performance was consistent across all anatomic landmarks and was not significantly different between demographic groups (Fig 2).
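For readers unfamiliar with the three metrics reported above, the following minimal sketch shows one way to compute them. The 26.2 pixel PCK threshold is the value given in the Figure 1 caption; the function names, the inclusive threshold comparison, the rank-based ROC-AUC formulation, and all toy coordinates and scores are assumptions for illustration, not the authors' evaluation code.

```python
import math
from statistics import median
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

PCK_THRESHOLD_PX = 26.2  # reference distance reported in the Figure 1 caption


def l2_errors(pred: Sequence[Point], truth: Sequence[Point]) -> List[float]:
    """Euclidean (L2) distance in pixels between predicted and labeled points."""
    return [math.hypot(px - tx, py - ty)
            for (px, py), (tx, ty) in zip(pred, truth)]


def pck(errors: Sequence[float], threshold: float) -> float:
    """Fraction of key points with error within the threshold.

    Assumption: the comparison is inclusive (error <= threshold).
    """
    return sum(e <= threshold for e in errors) / len(errors)


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC-AUC as the probability that a positive outscores a negative.

    Ties count as half a win. Labels are 1 (e.g. occluded) or 0 (visible).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical predictions and labels for three landmarks.
predicted = [(10.0, 10.0), (20.0, 20.0), (50.0, 50.0)]
labeled = [(13.0, 14.0), (20.0, 21.0), (50.0, 90.0)]

errors = l2_errors(predicted, labeled)
median_error = median(errors)
pck_value = pck(errors, PCK_THRESHOLD_PX)

# Hypothetical occlusion scores and ground-truth occlusion labels.
auc = roc_auc([0.9, 0.3, 0.8, 0.1], [1, 1, 0, 0])

print(errors, median_error, pck_value, auc)
```

The pairwise ROC-AUC is quadratic in the number of samples, which is acceptable for a sketch; a sorted-rank implementation would be preferable for datasets of the size reported here.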

Conclusion(s): We demonstrate that Pose ViT can accurately identify anatomic landmarks on infants in their “natural” ICU setting. We found significant improvement compared to prior work. We also observed consistent performance across multiple demographic characteristics including caregiver-reported race/ethnicity, addressing important concerns about bias and generalizability of computer vision AI. Future applications include video monitoring for sedation, cerebral dysfunction, feeding intolerance, developmental delay, and seizures.

Table 1. Demographic and clinical characteristics of patients in this study.

Figure 1. Pose AI using vision image transformers (ViT) had high performance as measured by three metrics on three evaluation datasets.

(a) Median L2 pixel error, the distance between predicted and manually labeled landmarks, in our ViT model (using DINOv2) was better than a recently published infant Pose AI model that used the decade-old ResNet backbone. (b) ROC-AUCs, which measure the ability to correctly predict anatomic landmark occlusion, were high on three evaluation datasets and higher than previously published results, which ranged from 0.89 to 0.94. (c) The percentage of correct key points (PCK), which is the proportion of points within a reference distance threshold (26.2 pixels in our data), was >98%, comparable to state-of-the-art pose algorithms in adults.

Figure 2. Comparison of performance of Pose AI with ViT between anatomic landmarks and across demographic groups.

(a) L2 pixel error, the distance between predicted and manually labeled landmarks, is shown as violin plots. It was consistently low across all labeled landmarks for frames from training data (blue, median 0.54 pixels, N=22,319), frames held out from training (orange, median 2.0 pixels, N=974), and frames from infants held out from training (green, median 2.7 pixels, N=1,399). The best L2 pixel error achieved with the older ResNet architecture from 2015 on the same data is shown as a vertical black dashed line (3.2 pixels). Previously reported human inter-rater variability, standardized to our camera resolution, is shown as a vertical red dashed line (6.3 pixels). Six labeled landmarks (0.03%) had an extremely high L2 error (>25 pixels), and their errors are shown with arrows; this is lower than the extreme error rate with older models (0.1%, Fisher's P=3×10^-6). (b) The L2 pixel error, shown as box plots with the median and 25th/75th percentiles, was not statistically significantly different within neurologic pathology, sex, and caregiver-reported race/ethnicity (all four Kruskal-Wallis P>0.3).
