Emergency Medicine 8


109 - Autonomous Early Retrieval of Medical Histories Using ChatGPT

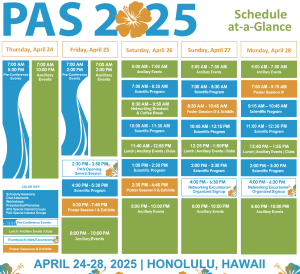

Sunday, April 27, 2025

8:30am - 10:45am HST

Publication Number: 109.3960

Vidya Raghavan, Boston Children's Hospital, Boston, MA, United States; Yuval Barak-Corren, Boston Children's Hospital, Cambridge, MA, United States; Alexandra Geanacopoulos, Boston Children's Hospital, Boston, MA, United States; Alessio Morley-Fletcher, Boston Children's Hospital, Boston, MA, United States; Mark Waltzman, Harvard Medical School, Boston, MA, United States; Andrew M. Fine, Boston Children's Hospital, Boston, MA, United States


Vidya R. Raghavan, MD (she/her/hers)

Attending Physician

Boston Children's Hospital

Boston, Massachusetts, United States

Presenting Author(s)

Background: Emergency department (ED) crowding is a public health crisis that compromises patient safety and quality of care and contributes to clinician burnout. Generative artificial intelligence (AI) large language models such as ChatGPT may improve efficiency and reduce the burden on healthcare providers.

Objective: To assess whether patients and families are receptive to an AI-based medical interaction, and to evaluate the usability, accuracy, completeness, efficiency, and readability of AI summaries generated from direct patient-AI interaction.

Design/Methods: We enrolled a convenience sample of children (or their parents) presenting to the emergency department with non-acute conditions (Emergency Severity Index [ESI] levels 3-5). Eligible patients were enrolled in the waiting room after informed consent was obtained. Participants first completed a baseline survey assessing any prior experience with ChatGPT. They then provided the history of present illness (HPI) to ChatGPT on a tablet, by dictation or typing, guided by a standardized prompt. The generated summary was shown to the participant, who rated its quality on a 10-point Likert scale. After the ED encounter concluded, the ChatGPT summary was compared with the attending and trainee HPIs on the following metrics: usability, acceptability, accuracy, completeness, efficiency, and readability. Two pediatric emergency medicine attendings rated the generated summary on each metric using a 10-point Likert scale.

Results: We approached 12 subjects, of whom 9 agreed to participate. Four of the nine had never heard of or used ChatGPT. Participants were aged 1 to 22 years (median, 11 years), and 6 of 9 (67%) were female. Each interaction with ChatGPT was completed within 5 minutes. Participants rated the generated summaries a mean of 8.5 on the 10-point Likert scale. Comparing the ChatGPT summaries with the trainee and attending HPIs, the attending reviewers gave a mean score of 8.6 across the domains of accuracy, completeness, efficiency, and readability. The two attendings' scores were within 1 point of each other for 80% of ratings. ChatGPT summaries scored lower for patients with chronic symptoms, suggesting that they may augment, but not replace, a clinician-obtained history.

Conclusion(s): Large language models may provide a complementary means to obtain histories while patients wait in the emergency department.