General Pediatrics 1

Session: General Pediatrics 1

700 - Prompting generative artificial intelligence programs to create plain language information about acute pediatric conditions

Friday, April 25, 2025

5:30pm - 7:45pm HST

Publication Number: 700.6511

Sean M. Frey, University of Rochester School of Medicine and Dentistry, Rochester, NY, United States; Andrea Milne Wenderlich, Golisano Children's Hospital at The University of Rochester Medical Center, Rochester, NY, United States; Crystal Craig, University of Rochester School of Medicine and Dentistry, Rochester, NY, United States; Shonna Yin, NYU Grossman School of Medicine, New York, NY, United States; Guillermo Montes, St. John Fisher University, Rochester, NY, United States

Sean M. Frey, MD, MPH (he/him/his)

Associate Professor

University of Rochester School of Medicine and Dentistry

Rochester, New York, United States

Presenting Author(s)

Background: Prior research has explored the potential for generative artificial intelligence (AI) programs to create written education materials for patients. However, the reading level of AI-produced content typically exceeds the 6th grade target recommended by health literacy experts. Effective prompts are needed for AI programs to produce information in plain language that can be easily read and understood by patients.

Objective: To examine informational materials aimed at caregivers about the home care of acute pediatric conditions from both existing sources and generative AI programs, and assess whether an advanced AI prompt incorporating health literacy recommendations results in information that is easier for caregivers to read and use.

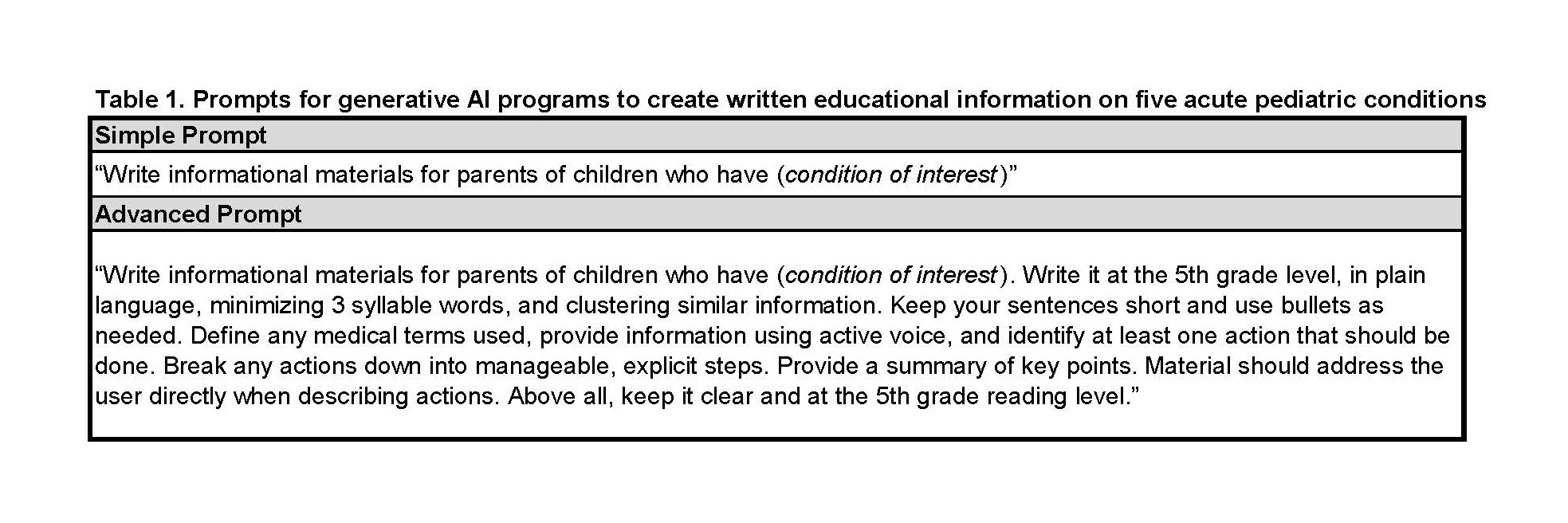

Design/Methods: We analyzed educational information about home care management for five common acute pediatric conditions (fever, sore throat, diarrhea, fainting, and bronchiolitis). Six documents were assessed for each condition: two from existing sources (HealthyChildren.org, UpToDate), two created by generative AI (ChatGPT 4.0, Gemini) using a basic prompt, and two created by generative AI using an advanced, more detailed prompt (Table 1). We determined the mean grade level of the documents using a panel of seven readability indices, analyzed readability data using multivariate analysis of variance (MANOVA), and inspected the Tukey post hoc homogeneous groups. Next, we assessed usability with the Patient Education Materials Assessment Tool (PEMAT), with domains of understandability and actionability (scale: 0-100%). Two general pediatricians generated PEMAT scores after documents were randomized and had identifiers removed. We calculated mean PEMAT scores for each document type, and inter-rater reliability using Cohen's kappa.
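As an illustration of how a single readability index yields a grade level, the sketch below computes the Flesch-Kincaid Grade Level in Python. The abstract does not name the seven indices in the panel, so the choice of Flesch-Kincaid is an assumption, and the syllable counter is a rough heuristic rather than the tool used in the study. The function names and sample sentences are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count vowel groups, drop a silent final 'e'.

    This is a heuristic assumed for illustration; dedicated readability
    tools use more careful rules or dictionaries.
    """
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid Grade Level:
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


# Hypothetical sample sentences, not taken from the study documents.
plain = "Give your child water. Let your child rest."
dense = ("Administer antipyretic medication according to the "
         "recommended pediatric dosing schedule.")

print(f"plain: {flesch_kincaid_grade(plain):.1f}")
print(f"dense: {flesch_kincaid_grade(dense):.1f}")
```

Short sentences and short words pull the grade level down, which is the behavior a plain-language prompt aims for. A mean grade level like the one reported here would average several such indices per document.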

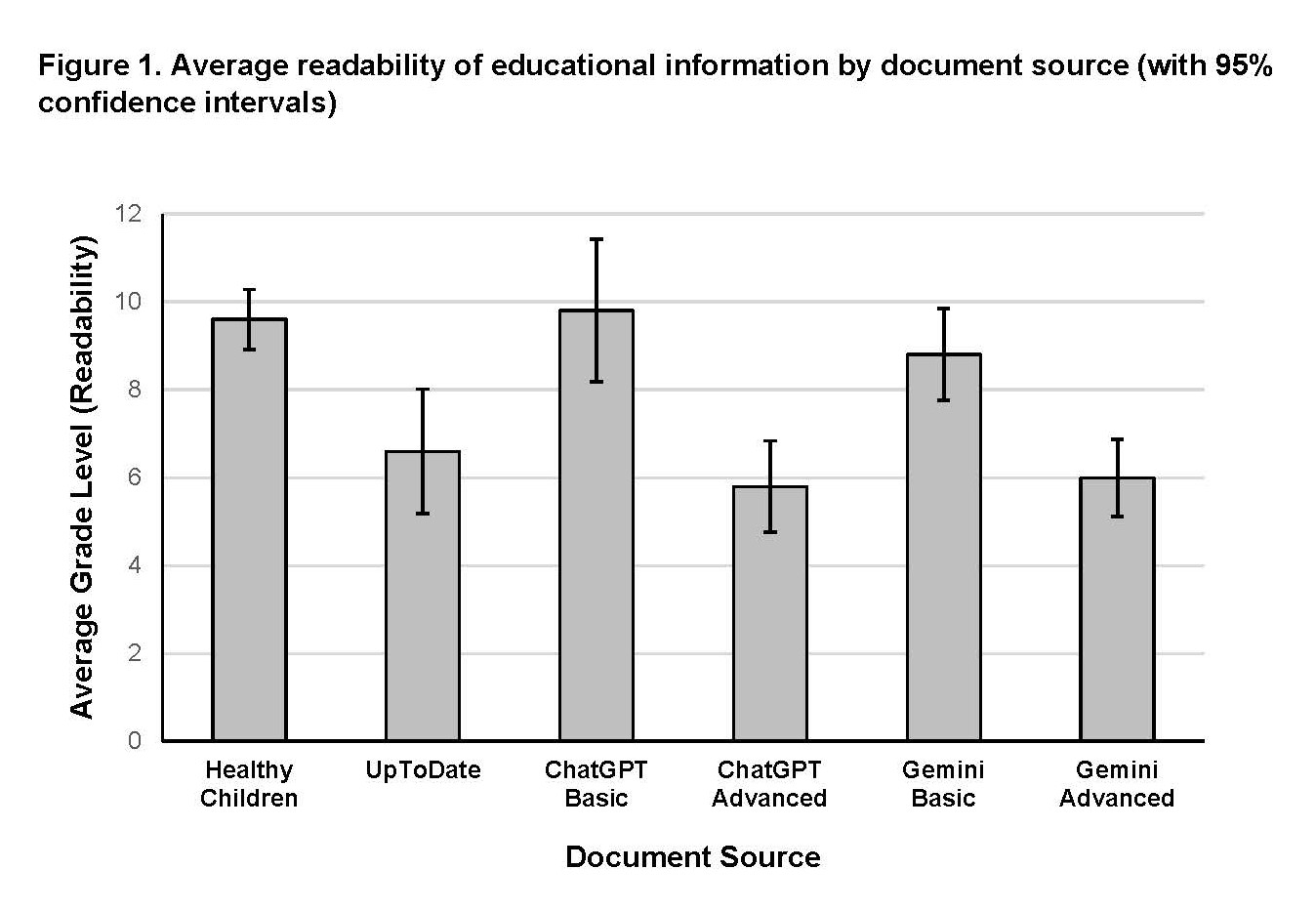

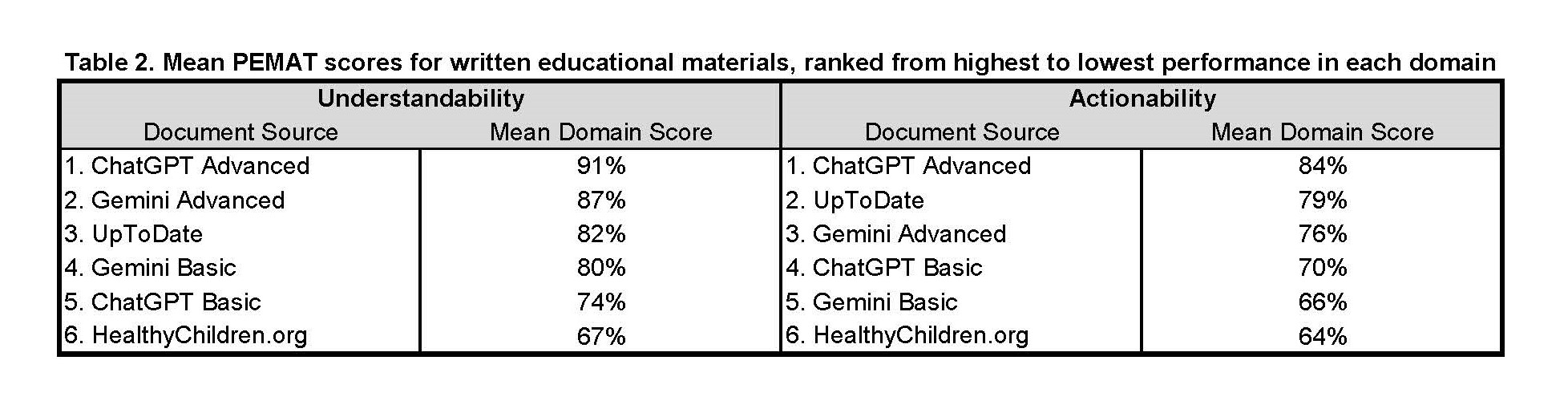

Results: The advanced prompt in ChatGPT resulted in information with the lowest mean reading level (5.8) and the highest scores for understandability and actionability (Table 2). The average readability of documents from both AI programs (advanced prompt) and from UpToDate achieved the reading level goal of ≤6th grade. The advanced prompt resulted in significantly better readability from both AI programs than the basic prompt (Figure 1). There was substantial inter-rater agreement for understandability (kappa=0.78) and actionability (kappa=0.70).
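For readers unfamiliar with the agreement statistic, the following is a minimal Python sketch of Cohen's kappa for two raters. It assumes agreement is computed over item-level categorical ratings (for example, agree = 1, disagree = 0 on each PEMAT item); the abstract does not state the unit of analysis, and the ratings shown are invented for illustration, not study data.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: (p_o - p_e) / (1 - p_e).

    p_o is the observed proportion of agreement; p_e is the agreement
    expected by chance from each rater's marginal category frequencies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    p_e = sum((freq_a[c] / n) * (freq_b[c] / n) for c in categories)
    if p_e == 1.0:
        # Both raters used one identical category throughout; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Invented item-level ratings (1 = agree, 0 = disagree), not study data.
rater_1 = [1, 1, 0, 0]
rater_2 = [1, 0, 0, 0]
print(cohens_kappa(rater_1, rater_2))
```

Kappa corrects raw percent agreement for agreement expected by chance, so two raters who agree on 75% of items can still have a kappa well below 0.75.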

Conclusion(s): A novel advanced prompt for generative AI programs produced free educational information on acute pediatric conditions that is readable, understandable, and actionable. Additional analysis is needed to ensure the accuracy of information resulting from the advanced prompt.

Table 1. Prompts for generative AI programs to create written educational information on five acute pediatric conditions.

Table 2. Mean PEMAT scores for written educational materials, ranked from highest to lowest performance in each domain.

Figure 1. Average readability of educational information by document source (with 95% confidence intervals).